At PointFive, our primary business is identifying cost-saving opportunities within cloud providers by thoroughly analyzing their environments. This process involves collecting a vast amount of data from multiple sources - billing exports, metrics, api etc. - and running complex analysis queries. To accomplish this, we utilize the leading data warehouse, Snowflake. As our scale increased and we onboarded more customers, we noticed a decline in performance and a sharp rise in costs. So, we decided to do what we do best at PointFive — save money, this time for ourselves.

As a young startup, we started with a simple approach: a single warehouse for all our Snowflake needs. A Snowflake warehouse is essentially an abstraction of a server, available in various sizes (X-Small, Small, Medium, etc.), starting at 1 credit per hour for an X-Small instance (around $2), with costs doubling for each size increase. Initially, a single warehouse was sufficient. However, as we added features and customers, the load on our warehouse increased. Each Snowflake warehouse is limited to 8 queries at a time, fewer if the queries are complex.

Snowflake advises against increasing that limit. At first, we scaled up the warehouse a couple of times, to improve the speed of our queries - but that quickly became insufficient. It was time to re-evaluate our Snowflake architecture.

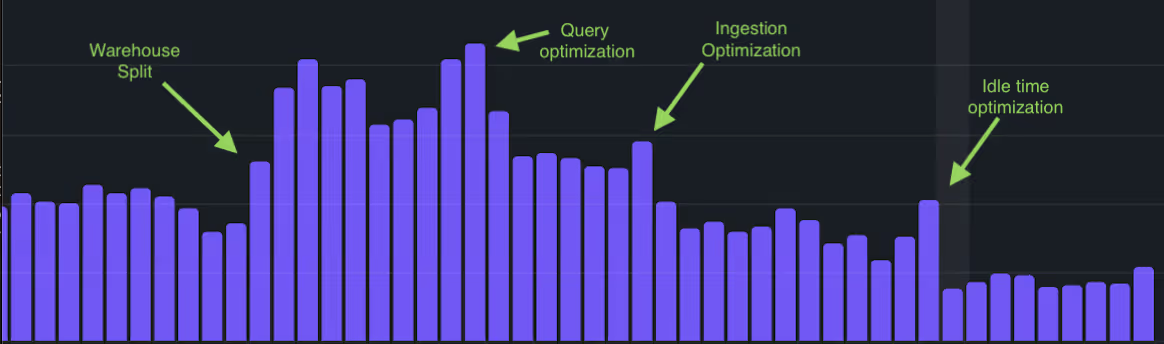

As with any problem, we began by examining the data. Our warehouse spent most of its time running complex analysis queries to find cost-saving opportunities. While some of these queries were small, others were enormous. This meant that during peak times, other queries were queued. Using Snowflake’s Most expensive queries dashboard (If you’d like to see for yourself, Go to Admin → Cost Management → Account Overview and scroll down), we pinpointed which queries took the longest. We mapped them out and embarked on our first improvement: splitting the warehouses.

We had three reasons to split warehouses:

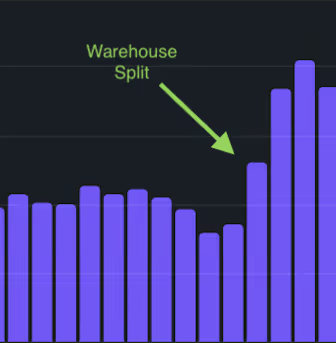

After implementing these changes, we expected costs to decrease slightly, as most queries were moved to smaller warehouses.

Instead, our costs doubled.

So what actually happened? Improving our performance meant all our queries ran properly. We previously had specific analysis queries that ran so long they timed-out constantly, and now worked well with our increased throughput. That means that we dramatically increased the total amount of query-seconds. It was a good problem to have, as the system was working flawlessly now, and the average time to refresh customer data was ten times faster. But our cost issue worsened. Our complex analysis queries, responsible for 70% of the total cost, were the main culprits.

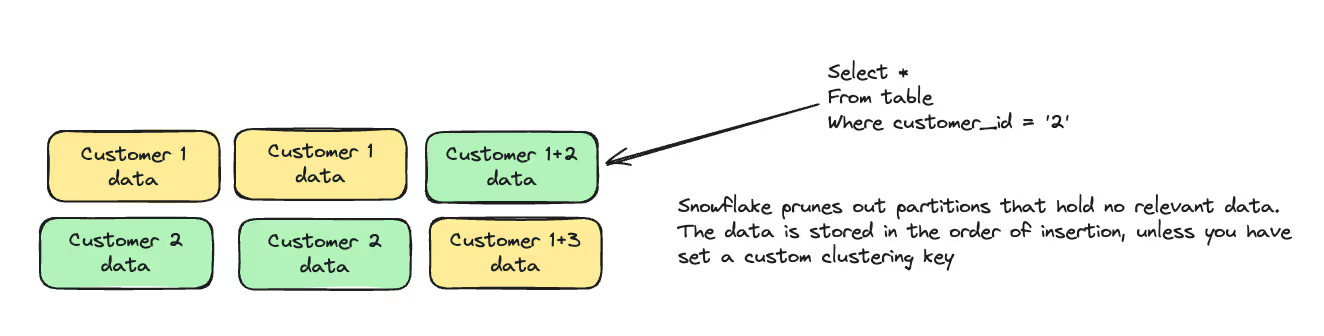

We started to take a deeper look into them - Snowflake's awesome query profiling feature revealed that we were scanning much more data than expected. Data in Snowflake is saved as micro-partitions - roughly, each file you copy into a table is saved as one or more micro-partitions. Snowflake saves metadata on each of those, and when a query runs, the query engine prunes out micro partitions with no relevant values.

Snowflake doesn’t support indexing - instead, it’s up to the query to make sure you don’t go over too much data. If, for example, you copy data customer by customer, and you don’t specify a customer filter in your query - it might need to scan the entire table. Which is exactly what happened to us.We had some views with inner CTEs (See: https://docs.snowflake.com/en/user-guide/queries-cte), that didn’t have customer filter, which was only filtered down the road.Thus, those inner queries had to scan the entire table, only to filter out these values at a later stage. We had no way to filter out those inner values from outside, as it was a different customer for each query. So we replaced those views with UDFs (https://docs.snowflake.com/en/developer-guide/udf/udf-overview), that allowed us to pass the customer as a parameter to those inner CTEs. Each query now ran only on it’s relevant data. See the following example:

-- Setup

create table metrics(

customer_id text,

metric_name text,

value float

);

-- Before

create or replace view max_cpu_view as (

with cpu as (

select value, metric_name, customer_id

from metrics

where metric_name = 'CPUUtiliaztion'

-- at this point we are going over the whole metrics table!

)

select value, customer_id from cpu

);

select max(value) from max_cpu_view where max_cpu_view.customer_id = 'xyz'

-- After

create function max_cpu(customer_id text)

returns float

as

$$

select max(value)

from metrics

where metric_name = 'CPUUtiliaztion'

AND metrics.customer_id = customer_id

$$;

select max_cpu('xyz')

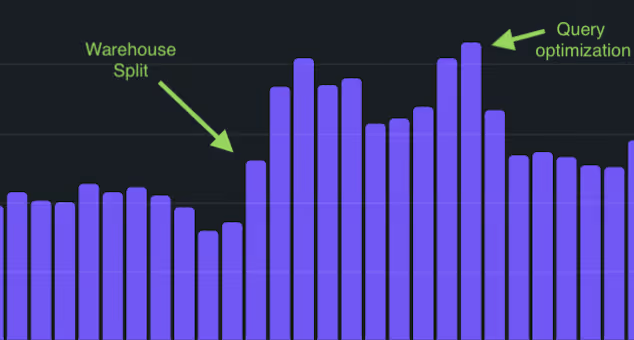

After deploying those changes, we had shaved off about 35% of our total cost, and the complex analysis queries ran twice as fast.

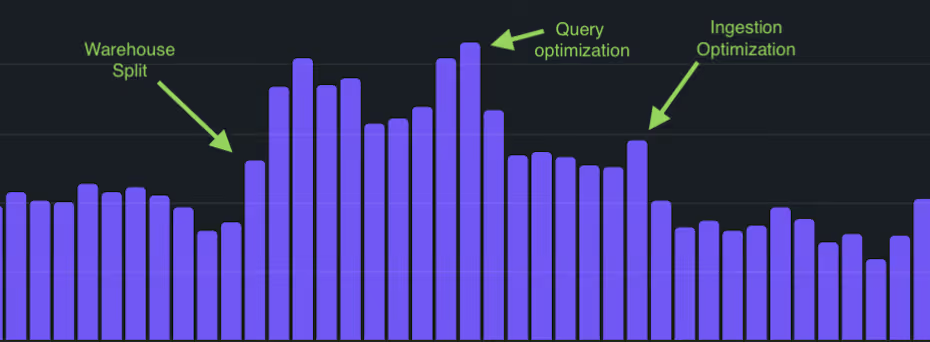

With our queries optimized, we took a look at how we can improve ingestion.

According to Snowflake, increasing warehouse size can help load more files

concurrently, but a single file will be ingested in the same time by an XS warehouse and an XL warehouse.

Therefore, we created ingestion warehouses ranging from XS to L, selecting the appropriate size at runtime to remain both performant and cost-efficient. It ensures all the warehouse threads

we are paying for are putting in the work, according to the specific load being performed. The formula for selecting a warehouse is rather simple - take the smallest warehouse that can ingest all your files concurrently. for example, for up to 8 files, choose X-Small. For 9-16 files, we chose Small, although these numbers could change for small files. Source: https://www.snowflake.com/blog/best-practices-for-data-ingestion/

If warehouse size matching isn’t for you, you might find Snowpipe useful.

However, this wasn’t quite enough for us. Some files were over 10GB, taking an XS warehouse a long time to process and costing a lot, even if it is only a single file. By splitting these files before ingestion, they could be loaded concurrently.

Snowflake recommends a file size of 100-250MB. For instance, a 6GB file that takes around 3 hours to load on an XS warehouse can be split into 60 100MB files, loading concurrently in about 3 minutes on a Large warehouse. This reduces the cost from ~6 credits to ~0.8 credits and provides a significant performance boost.

These changes reduced our costs by another 30% and decreased our ingestion costs by 85%.

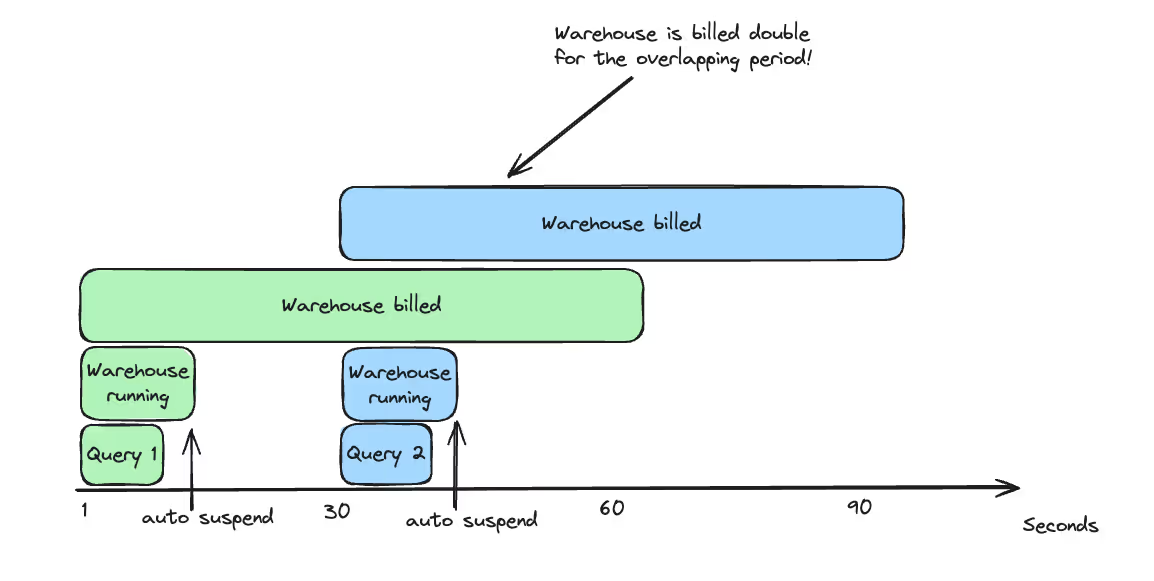

We took a deeper look at each of those disadvantages: warehouse startup took between 200-600ms in our testing, which wasn’t too bad, and there wasn’t a noticeable hit from the lost caching, at least in our case. As for the last point - to ensure we squeezed every buck from the minimum of 60 seconds bill, we bulked together enough queries to ensure they will take at least 60 seconds. So after all that, we decided to try reducing auto suspension to 1 second, meaning it would take 1-30 seconds to suspend the warehouse, depending on the Snowflake background process that shuts them down.

This optimization has saved us another 50% in our total costs.

Snowflake is an incredible data warehouse, but like any cloud vendor, costs can quickly escalate. Understanding Snowflake’s pricing model and caveats can greatly reduce costs while improving performance. Overall, we reduced our daily credits charged by 85% from the peak and increased our average customer data refresh speed by 20x, by doing the following:

Some optimizations were outside the scope of this cost reduction project. In the future, we plan to utilize data materialization more, such as materialized views or dynamic tables, to avoid redundant calculations. Since storage is cheaper than compute on Snowflake, these efforts can often reduce costs. Additionally, we might bulk queries further, as fewer large queries tend to be more cost-efficient than many small ones.

Another thing we could do is reduce the idle time even more by suspending the warehouse ourselves. Snowflake won’t stop running queries if we did, and instead alter the warehouse state to quiescing[https://docs.snowflake.com/en/sql-reference/sql/alter-warehouse#usage-notes].

For now though, we are satisfied with our costs and have postponed these efforts.

That’s what we do best at PointFive - We go deep, find the root causes for high costs and suggest the best course of action. If you are interested in finding out how your organization can reduce cloud costs on AWS, contact us!

If you found this post interesting, have any further questions about optimizing snowflake costs, or just wanna say hi, feel free to talk to me at or.chen@pointfive.co